It sounds like a contradiction, doesn't it? But I'm not suggesting that artificial intelligence (AI) itself is doing the damage. Rather, it's the exponentially growing demand for compute – the raw infrastructure that powers AI – and the lack of equitable access to that essential resource. In other words: no compute equals no innovation for far too many organizations, large and small (yes, despite DeepSeek and Stargate). That has to change.

First, let's take a step back. Innovation wasn't always this gated – and that was a really good thing.

Consider the Internet, a now-ubiquitous technology whose ubiquity was far from assured. Back in the 1940s, computers cost a fortune, were operated almost exclusively by governments, and barely fit into a single room! The prevailing opinion, even by the late 1970s, was that this technology would be reserved for the very few. DEC founder Ken Olsen expressed that sentiment when he infamously declared, "There is no reason for any individual to have a computer in their home."

What changed? Economics. Demand increases and mass adoption becomes possible when the price of a product's complementary components (or "complements") decreases.[1] IBM commoditized computer hardware, putting PCs in homes everywhere and a spoiler on Ken Olsen's prediction. Intel drove down the cost of processing power with the x86. Bob Metcalfe's Ethernet, once standardized, drove down networking prices and became the open standard for connectivity.

For the Internet, letting the free and fair market grow through openness and standardization rapidly transformed the world and made global connectivity possible. The secret behind each of these complementary innovations wasn't control; it was removing constraints.

But now, as we move deeper into the age of machine learning, AI innovation is choking on its core complements: data and compute. Innovation requires vast computational and data resources. And that compute, the fuel that powers this new era, isn't just expensive. Its capacity is constrained, and control over it is concentrated in the hands of a select few. Even those select few suffer from similar cost and capacity constraints, as the Stargate announcement makes abundantly clear.

In this world – where cost, capacity and control are constrained – innovation gasps.

The massive demand for (and concentration of) compute

Despite the recent decline in the cost of GPU infrastructure, the cost of access will continue to rise. Researchers from Stanford University and the nonprofit research institute Epoch AI have found that the cost of the computational power required to train AI models is doubling every nine months.[3] We've heard Sam Altman describe "compute as the currency of the future." If AI proliferates as widely as folks like Sam project, consider the following back-of-the-envelope math. Supporting every working-age person in the world (age 15-64) with one H100 (which consumes ~1.2 kW) would cost nearly $100 trillion at today's prices. That would be nearly thirty times our global oil demand[4] – and Stargate's $500B would amount to <1% of what is needed!

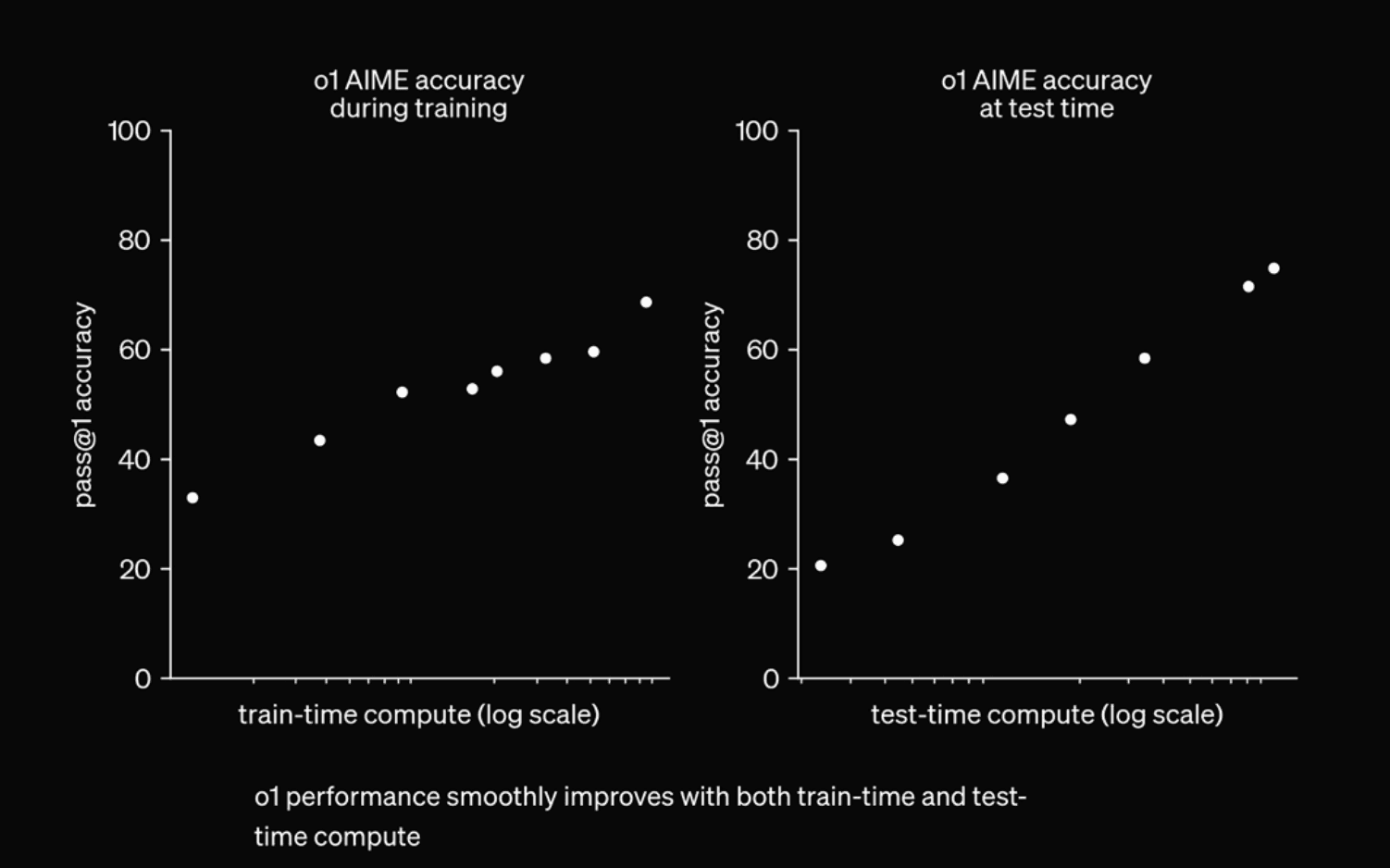
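
To make the back-of-the-envelope math reproducible, here is a minimal sketch. The inputs are loudly labeled assumptions. The population (~5 billion working-age people) and per-GPU price (~$20,000) are round figures I chose because they reproduce the "nearly $100 trillion" total; they are not the author's stated inputs. The 1.2 kW draw, the $500B Stargate figure, and the nine-month doubling period are taken from the text.

```python
# Back-of-the-envelope sketch of the "one H100 per working-age person" estimate.
# ASSUMED round inputs (not from the original analysis): population and GPU price.
WORKING_AGE_POPULATION = 5.0e9  # assumed: people aged 15-64, rounded
H100_PRICE_USD = 20_000         # assumed: per-GPU purchase price
H100_POWER_KW = 1.2             # per-GPU draw, as quoted in the text
STARGATE_USD = 500e9            # Stargate's announced commitment
DOUBLING_MONTHS = 9             # training-compute cost doubling period, as quoted

fleet_capex = WORKING_AGE_POPULATION * H100_PRICE_USD
fleet_power_tw = WORKING_AGE_POPULATION * H100_POWER_KW / 1e9  # kW -> TW
stargate_share = STARGATE_USD / fleet_capex
annual_cost_growth = 2 ** (12 / DOUBLING_MONTHS)  # growth factor per 12 months

print(f"Fleet hardware cost: ${fleet_capex / 1e12:.0f} trillion")
print(f"Continuous power draw: {fleet_power_tw:.1f} TW")
print(f"Stargate's $500B covers {stargate_share:.1%} of it")
print(f"A {DOUBLING_MONTHS}-month doubling time means ~{annual_cost_growth:.2f}x per year")
```

Under these assumptions, Stargate covers about 0.5% of the hardware cost, consistent with the "<1%" above. A nine-month doubling works out to roughly 2.5x per year.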

We are extracting even more intelligence per dollar due to research…which in turn unlocks more use cases for AI…which generates more demand…which drives up AI spending. As part of OpenAI's "Shipmas," they released their o1 reasoning model and swiftly followed it by announcing their o3 model. Both demonstrate that the returns from test-time compute scaling (i.e., improving accuracy by spending more compute at inference time) are immense, and that we've entered a new paradigm.[5] While I wish I had the space to write a detailed post about the stepwise improvement of these reasoning models, the main takeaway is this: OpenAI has shown that quality improves with more compute!
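
OpenAI hasn't published exactly how o1 and o3 spend their extra inference compute. This sketch therefore swaps in a simpler, well-known form of test-time scaling: sample the model several times and take a majority vote (often called self-consistency). It assumes binary correct/incorrect answers, independent samples that are each correct with probability p > 0.5, and an odd number of samples k. Under those assumptions, accuracy climbs as k (and therefore compute) grows.

```python
from math import comb


def majority_vote_accuracy(p: float, k: int) -> float:
    """Probability that a strict majority of k independent samples is correct.

    Assumes each sample is correct with probability p, independently of the
    others, and that k is odd so there are no ties.
    """
    if k < 1 or k % 2 == 0:
        raise ValueError("k must be a positive odd integer")
    need = k // 2 + 1  # smallest number of correct samples that wins the vote
    return sum(comb(k, i) * p**i * (1 - p) ** (k - i) for i in range(need, k + 1))


# Spending more inference compute (more samples) on the same hypothetical model:
for k in (1, 3, 5, 11, 21):
    print(f"{k:>2} samples -> accuracy {majority_vote_accuracy(0.6, k):.3f}")
```

Each extra sample costs another full inference pass, so accuracy gains here are paid for linearly in compute. That tradeoff drives the cost figures in the next paragraph.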

Case in point: o3, trained on the ARC-AGI-1 Public Training set, scored 75.7% on the Semi-Private Evaluation set within the $10k compute limit. In a high-compute (172x) configuration, it scored a whopping 87.5%.[6] This is great news, right!? Wrong. AI innovation is killing itself because the cost of compute for this task comes in at the equivalent of $17-20 per task in the low-compute mode. A human would likely do the same task for roughly one-quarter of that cost, or about $5. The cost will likely come down, but only until the next improvement (when it increases again!). And only time will tell whether the cost will ever come down enough to be competitive with a human.
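
The cost comparison can be made explicit with a little arithmetic. This sketch uses only the figures quoted above. One assumption is mine, not the source's: a "cost per solved task" computed as cost per task divided by accuracy. The $5 human figure is left unadjusted, since no human accuracy is stated.

```python
# Illustrative cost comparison using the ARC-AGI figures quoted in the text.
# Assumption (not from the source): cost per *solved* task = cost per task / accuracy.
O3_LOW_COMPUTE_COST_RANGE = (17.0, 20.0)  # USD per task, as quoted
O3_LOW_COMPUTE_ACCURACY = 0.757           # 75.7% on the Semi-Private Evaluation set
HUMAN_COST_PER_TASK = 5.0                 # USD per task, as quoted


def cost_per_solved_task(cost_per_task: float, accuracy: float) -> float:
    """Average spend needed to obtain one correct answer."""
    if not 0.0 < accuracy <= 1.0:
        raise ValueError("accuracy must be in (0, 1]")
    return cost_per_task / accuracy


for cost in O3_LOW_COMPUTE_COST_RANGE:
    ratio = HUMAN_COST_PER_TASK / cost  # human cost as a fraction of o3's cost
    solved = cost_per_solved_task(cost, O3_LOW_COMPUTE_ACCURACY)
    print(f"o3 at ${cost:.0f}/task: human costs {ratio:.0%} as much; "
          f"~${solved:.2f} per solved task")
```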

But what about DeepSeek, you ask? While many of DeepSeek's claims are still being investigated and validated, it does represent a potentially big breakthrough. To avoid adding noise to the many perspectives out there, I'll simply say that I see DeepSeek as a call for continued competition toward even more innovation in AI. We know that reasoning models like o1/o3 and R1 benefit from more test-time compute. With DeepSeek's claimed innovations, we're now entering an era where compute isn't used only for model training; it's also used to create better data! The improvements in intelligence are going to come not from putting in more data (scraped from the internet) but from putting in more compute (to generate higher-quality data).

That said, regardless of the efficiency gains DeepSeek may deliver, demand in the GPU market is still outpacing supply by an order of magnitude. More efficient compute doesn't make more compute any less useful or necessary. So the challenge – and the opportunity – remains. Harking back to the commoditization examples above…if the product of AI is intelligence, then we need to reduce the cost of its complements (compute and data). The more we can decrease the cost of compute and data, the more demand we'll have for intelligence.

But that's just not happening as it should. In fact, quite the opposite.

Control and influence over compute are already concentrating among a few very well-resourced organizations. Only 45% of all new domestic GPUs are being consumed by startups, and that share is trending down by the day. Basically, unless your name is Google, Amazon, Microsoft or Meta – or you've got funding like OpenAI and Anthropic – you've got to fight for your share.

Imagine: a world where only a handful of organizations dictate not just the market, but the future of everything from the macro (like energy and automated transportation) to the micro (like booking a vacation or hitting up the drive-thru).

From bare metal to black box

In my view, we need to democratize the AI tech stack – from silicon to servers, data centers to virtualization and the cloud, all the way to AI development platforms. We already see several excellent companies doing this work. Groq, for example, is innovating at the silicon level. Lambda is making tremendous strides at the cloud level. But no one gets all the way down to the root of the problem: compute.

Bluntly, the system for buying compute…DOES NOT COMPUTE.

Lack of market transparency DOES NOT COMPUTE

The reality most people don't see is that compute buyers are flying blind. There's no real market price – access to the same GPU service can range from $1.58 to >$8.50 per hour. When you do secure access, you have no way of knowing if it's a good deal or if the technology will even work.

Vendor lock-in DOES NOT COMPUTE

Long-term contract lock-ins force you to bet on your future needs, often leading to paying premiums or being stuck with unused capacity.

Managing multiple vendors DOES NOT COMPUTE

Different providers, different contracts, different tech stacks…it's like trying to run your workloads across a dozen incompatible ecosystems.

Unused capacity DOES NOT COMPUTE

Pessimists believe that only 10% of compute is effectively utilized. I'm not that negative; I'd say 50%. But even at an optimistic 99% utilization, at the scale of investment sketched above, we'd still be talking about waste on the order of trillions of dollars! To me, that's unacceptable.
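
To see why even high utilization leaves a lot on the table at this scale, here is a simple sketch. It treats idle value as capital spend × (1 − utilization). It applies that to the hypothetical ~$100 trillion "one H100 per working-age person" fleet from earlier. Both the formula and the fleet size are simplifying assumptions for illustration, not measured figures.

```python
# Simplified idle-capacity sketch. ASSUMPTION: idle value = capex * (1 - utilization),
# applied to the hypothetical ~$100T fleet from the back-of-the-envelope estimate.
HYPOTHETICAL_FLEET_CAPEX_USD = 100e12


def idle_value(capex_usd: float, utilization: float) -> float:
    """Dollar value of capacity left idle at a given utilization rate (0..1)."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return capex_usd * (1.0 - utilization)


for u in (0.10, 0.50, 0.99):  # pessimist, author's estimate, optimistic case
    idle = idle_value(HYPOTHETICAL_FLEET_CAPEX_USD, u)
    print(f"{u:.0%} utilized -> ${idle / 1e12:,.0f} trillion idle")
```

At 99% utilization, the idle share of this hypothetical fleet is still about $1 trillion.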

Heterogeneity DOES NOT COMPUTE

One of the biggest reasons we have so much underutilized and poorly utilized capacity is the heterogeneity of compute. An H100 chip is an H100 chip until the moment you put it inside someone's proprietary cloud. The trouble is, as you scale and look to migrate vendors to meet your needs, you're going to spend a ton of money moving from cloud to cloud. And, eventually, you're going to get stuck in some vendor's cloud where they can (and probably will) jack up the prices on you.

The compute providers themselves aren't faring much better. They've had to build expensive orchestration systems to compete – without upfront guarantees of revenue. When demand fluctuates, GPUs sit idle, burning capital and eroding profitability. Overbuilding to anticipate demand, only to face market downturns, creates massive inefficiencies that inflate costs for everyone and lock smaller players out of the market entirely.

Here's where commoditization could be transformative for access to raw computing power. In fact, the innate properties of compute naturally lend themselves to standardization and liquid markets:

- Compute exhibits uniformity as AI's foundational infrastructure, analogous to power transmission in electricity

- Compute represents an essential raw material for model development

- Compute commands a massive market with capital expenditures in the trillions already

- Compute demonstrates minimal differentiation in core capabilities across providers (core meaning the chip itself, housed in a data center with a bare-bones software orchestration system)

What would I propose? We need neutrality. We need a system that injects transparency and liquidity into the process. In sum, it's time for a market that lets buyers access compute when and where they need it, while enabling providers to monetize unused capacity efficiently.

I've been fortunate to be on the front lines of AI innovation: helping launch the first AI research lab in Japan, serving as one of the leaders of AI infrastructure at Apple, delivering vital compute resources at Lambda, and now, once again, working as a founder myself. So, I get it: the complexity can feel overwhelming and can stifle innovation. That's why my colleagues and I have launched the Compute Exchange.

Our aim is nothing short of injecting clarity, competition and trust into a system that's overdue for change. The Compute Exchange is an open, neutral platform where buyers and sellers meet on equal terms, with transparency baked in from the start. Here's how it works:

Market-Driven Pricing

As in a stock or derivatives market, we use digital auctions to ensure prices reflect real demand, not arbitrary premiums. Buyers bid, suppliers compete. Prices drop or rise to where they should be. Our novel matching algorithm concentrates liquidity across a variety of heterogeneous elements to enable a powerful, performant exchange.
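
The Compute Exchange's actual matching algorithm isn't described here. This sketch therefore shows a generic single-price call auction instead: bids are sorted from highest to lowest, asks from lowest to highest, and they are matched while the best remaining bid meets the best remaining ask. A key simplifying assumption is that every GPU-hour is interchangeable, which the real exchange explicitly does not assume. The `Order` type and the sample orders are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Order:
    party: str       # hypothetical participant name
    gpu_hours: int   # quantity offered or requested
    price: float     # USD per GPU-hour (limit price)


def clear_call_auction(bids, asks):
    """Match bids and asks; return (clearing_price, traded_gpu_hours).

    Assumes homogeneous GPU-hours. The clearing price is the midpoint of the
    last matched bid and ask, one common convention among several.
    """
    bids = sorted(bids, key=lambda o: o.price, reverse=True)  # best bids first
    asks = sorted(asks, key=lambda o: o.price)                # cheapest asks first
    bid_rem = [b.gpu_hours for b in bids]
    ask_rem = [a.gpu_hours for a in asks]
    i = j = traded = 0
    last_bid = last_ask = None
    while i < len(bids) and j < len(asks) and bids[i].price >= asks[j].price:
        q = min(bid_rem[i], ask_rem[j])  # fill as much as both sides allow
        traded += q
        last_bid, last_ask = bids[i].price, asks[j].price
        bid_rem[i] -= q
        ask_rem[j] -= q
        if bid_rem[i] == 0:
            i += 1
        if ask_rem[j] == 0:
            j += 1
    if traded == 0:
        return None, 0
    return (last_bid + last_ask) / 2, traded


bids = [Order("A", 100, 3.00), Order("B", 50, 2.20), Order("C", 80, 1.50)]
asks = [Order("X", 60, 1.60), Order("Y", 100, 2.00), Order("Z", 100, 2.80)]
price, volume = clear_call_auction(bids, asks)
print(f"Cleared {volume} GPU-hours at ${price:.2f}/GPU-hour")
```

In this example, buyer C's $1.50 bid and supplier Z's $2.80 ask sit outside the clearing range and go unfilled. Everyone who does trade pays or receives the same price.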

Standardization, Utilization and Seamless Migration

No more dealing with multiple terms of service (we have just one across all providers), or with varying policies on resale, data egress costs, and more. We are building toward one open standard for global computing that will drive utilization rates up and complexity way down. In turn, we'll enable seamless live workload migration across different providers to make it even easier for you to have true optionality.

Flexibility and Risk Mitigation

Whether you need compute for a week or a year, you only pay for what you need – and you can resell unused capacity. Over time, we'll also enable risk management through derivatives products that let buyers "hedge" their compute needs. Think of it like a "compute battery" that you can charge up and down seamlessly when you need more compute or want to offload capacity.
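
The source doesn't specify what these derivatives products will look like. This sketch assumes the simplest hedge, a cash-settled forward contract on GPU-hours: the buyer agrees on a price today, still buys compute at the spot price on delivery, and receives (or pays) the difference. The forward price, quantity, and spot scenarios below are hypothetical.

```python
def unhedged_cost(gpu_hours: float, spot_price: float) -> float:
    """Cost of buying all compute at the delivery-date spot price."""
    return gpu_hours * spot_price


def hedged_cost(gpu_hours: float, spot_price: float, forward_price: float) -> float:
    """Buy at spot, but hold a long forward paying (spot - forward) per GPU-hour.

    The forward's payoff offsets spot moves, so the net cost is locked at
    gpu_hours * forward_price whatever the spot price turns out to be.
    """
    forward_payoff = gpu_hours * (spot_price - forward_price)
    return unhedged_cost(gpu_hours, spot_price) - forward_payoff


GPU_HOURS = 1_000      # hypothetical quantity
FORWARD_PRICE = 2.50   # hypothetical USD per GPU-hour agreed today
for spot in (1.50, 2.50, 4.00):  # hypothetical delivery-date spot prices
    print(f"spot ${spot:.2f}: unhedged ${unhedged_cost(GPU_HOURS, spot):,.0f}, "
          f"hedged ${hedged_cost(GPU_HOURS, spot, FORWARD_PRICE):,.0f}")
```

A forward removes upside as well as downside: if spot falls to $1.50, the hedged buyer still pays $2,500. An option-style product would keep the upside in exchange for an upfront premium.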

Governance

Access to compute isn't just about tech. It's about policy, too. That's why we're working to create a self-regulated market with global interoperability and quality standards. Over time, this self-governing system will enable us to provide the first objective quality rating for compute (akin to a Moody's scorecard).

Sustainability

Given the voracious energy consumption required for compute, we're also bringing sustainability into the equation. Buyers can see the environmental impact of their compute and choose carbon-efficient/carbon offset options.

Public Policy

We advocate for policies that prohibit data egress fees, mandate interoperability standards and support public-private partnerships to ensure equitable access to compute. By collaborating with lawmakers, industry leaders and researchers, the Compute Exchange seeks to create a fairer, more open AI ecosystem. Imagine players big and small getting a chance to benefit from the $500B Stargate project.

We're at an inflection point. We can move forward with the biased, black-box approach to compute and stifle inspiration and innovation. Or we can do what entrepreneurs do best: bring Darwinian market forces to this system, where innovation thrives because competition drives prices down, quality up, and progress forward. This is why I see DeepSeek as a call for more competition and innovation, and Stargate as an opportunity for OpenAI, SoftBank, and Oracle to provide massive computing resources to the entire American AI ecosystem, not just themselves.

If we are to realize the transformational potential of AI for our world, a neutral, transparent market isn't just a business solution. It's a responsibility and an imperative. Remember, the more we can decrease the price of intelligence's complements – compute and data – the faster AI will spread and the more impactful it will be.

Whether you're a compute buyer or seller, a researcher, or someone who believes that innovation shouldn't be brokered by a select few…join us. Register for an auction, or request a demo. Together, we can build the infrastructure for the future of intelligence.

Sources

1. https://www.joelonsoftware.com/2002/06/12/strategy-letter-v

2. https://openai.com/12-days/

3. https://time.com/6984292/cost-artificial-intelligence-compute-epoch-report/

4. Donald Wilson, The Post AGI Economy

5. https://stratechery.com/2024/openais-new-model-how-o1-works-scaling-inference/

6. https://arcprize.org/blog/oai-o3-pub-breakthrough